Architecture and Security

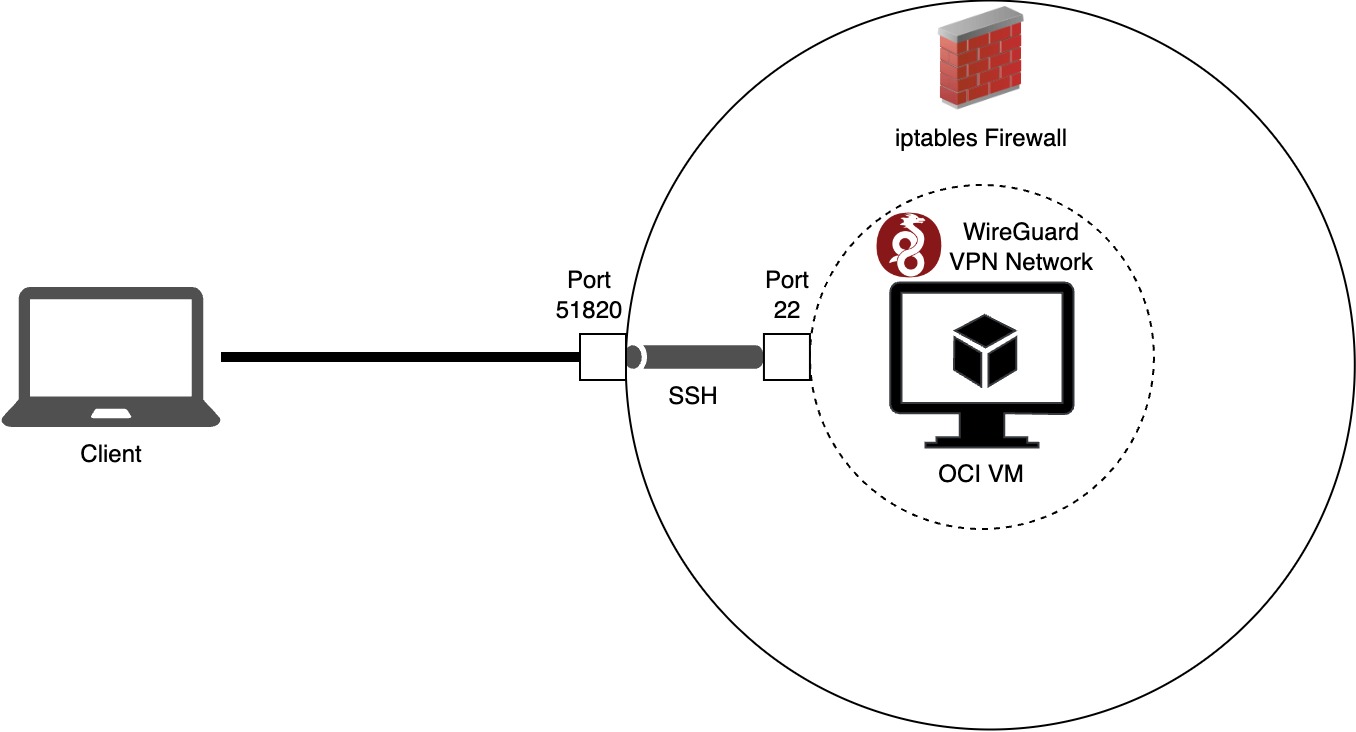

It is time to think about the architecture and security aspects of our current setup. Learning how to create a VM with Terraform and configure it with Ansible is nice, but once you want to use the system productively, you have to make sure that it is secure and does not grant access to unwanted actors. That's where VPNs, firewalls and jumphosts come into play and force you to think about your networking architecture:

- VPN: Many of us know a virtual private network (VPN) as a way to access private corporate networks or to circumvent geoblocking of certain services on the public internet. Just like a company does, you can use a VPN to create your own private network and make the machines inside it accessible only to trusted devices.

- Firewall: Firewalls control incoming and outgoing traffic to and from a system (also called ingress and egress). While there are many different types and characteristics of firewalls, and marketing departments never shy away from inventing new 🐍-oil, the basic goal is to allow or reject specific traffic. This can be based on the source of the traffic (like IP addresses), the protocols being used (e.g. ICMP, TCP, UDP) or the destination of a request (e.g. port 22 for SSH or ports 80/443 for HTTP/HTTPS).

- Jumphost: No, a jumphost is not a trampoline park for the birthday party of your children. Rather it is a special server (also called jump server) that connects two or more devices in different security zones to each other. It often uses SSH and firewalls or VPNs to access a secure system from within an unsecured environment.

How does this all fit together now? We chose the GCP VM as the production system. The production system should only be accessible from a single machine, our jumphost. The jumphost itself should only be accessible from within the VPN. To make this happen:

- A VPN server needs to be installed on the jumphost. I chose WireGuard in this example because it is lightweight, fast and (relatively) easy to configure.

- A firewall needs to only allow traffic on udp/51820 of the public IP address of the jumphost. All other ports or other protocols should be closed down in order to decrease the exposure to the public internet.

- Since port 22 is closed as well, no SSH connection to the jumphost is possible unless the client is connected to the VPN. And since the VPN peers have to be pre-configured, only a limited number of devices can connect to the jumphost at all.

- The ssh-config needs to be configured in order to forward the agent to the second VM hosted by GCP.

- Configuration of the second target VM

The rest of this article will guide you through the process of realizing the goals mentioned previously.

1. Installation of the WireGuard server on the jumphost and a WireGuard client on the local system (my laptop)

Installing WireGuard on both the server and the client (an Ubuntu server and a macOS client in my example) is better explained by Jay Rogers in this article. I will provide you with an Ansible script in a minute, but before that, let's discuss the configuration files of server and client.

Server

    [Interface]
    PrivateKey = ***server_private_key***
    Address = 10.10.0.1/24
    ListenPort = 51820

    [Peer]
    PublicKey = ***client_public_key***
    AllowedIPs = 10.10.0.4/32

WireGuard creates a public and private key pair for both server and client, which means that you have two keys on each machine and need at least four in order to establish a connection. Don't get confused!

[Interface] describes the server settings: it requires the server's private key and defines the IP address of the server inside the VPN. Make sure that these addresses are not already used by other devices in the same network. ListenPort sets the UDP port the server listens on; 51820 is the port conventionally used for WireGuard. If you omit it, WireGuard picks a random port, so on a server you want to set it explicitly.

Now [Peer] can be a recurring entry of one or multiple peers that are allowed to connect to the VPN server. You need the public key and its assigned IP address for each device that you want to grant access to the virtual private network.
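To illustrate the "recurring [Peer] entry" idea, here is a minimal Python sketch that renders a server config for several clients and refuses tunnel addresses that are outside the subnet or already taken. It is not part of my Ansible setup; the function name `render_server_config`, the placeholder keys and the second peer on 10.10.0.5 are assumptions made up for this example.

```python
import ipaddress


def render_server_config(private_key, address, listen_port, peers):
    """Render a WireGuard server config with one [Peer] block per client.

    `peers` maps a client's public key to the tunnel IP assigned to it.
    """
    interface = ipaddress.ip_interface(address)
    used = {interface.ip}  # the server's own tunnel address is already taken
    lines = [
        "[Interface]",
        f"PrivateKey = {private_key}",
        f"Address = {address}",
        f"ListenPort = {listen_port}",
    ]
    for public_key, peer_ip in peers.items():
        ip = ipaddress.ip_address(peer_ip)
        if ip not in interface.network:
            raise ValueError(f"{peer_ip} is outside {interface.network}")
        if ip in used:
            raise ValueError(f"{peer_ip} is already in use")
        used.add(ip)
        lines += ["", "[Peer]", f"PublicKey = {public_key}", f"AllowedIPs = {peer_ip}/32"]
    return "\n".join(lines) + "\n"


# Placeholder keys; the second peer is hypothetical.
config = render_server_config(
    "<server_private_key>",
    "10.10.0.1/24",
    51820,
    {"<laptop_public_key>": "10.10.0.4", "<phone_public_key>": "10.10.0.5"},
)
print(config)
```

Each additional device simply becomes one more entry in the `peers` mapping, which mirrors how you would append another [Peer] section by hand.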

Client

    [Interface]
    PrivateKey = ***client_private_key***
    Address = 10.10.0.4/32

    [Peer]
    PublicKey = ***server_public_key***
    Endpoint = vpn.paulelser.com:51820
    AllowedIPs = 10.10.0.0/24

Now on the client side, the config is almost the reverse of the server's. Here in the [Interface] section we use the client's private key and the assigned IP address that was called AllowedIPs in the server config.

The [Peer] section now has one or more VPN networks that the peer could connect to. It needs the server's public key and an Endpoint to connect to. The Endpoint can either be an IP address or an FQDN that will be resolved by a DNS server later on. Use the same port that you set on the server earlier.

AllowedIPs specifies the range of IP addresses whose traffic should be routed to the server. In this example, traffic for the subnet 10.10.0.x (meaning 10.10.0.1 up to 10.10.0.254) will take the WireGuard VPN tunnel. This allows us to reach not only the server but also other potential devices in the same VPN from the client.
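If you want to double-check which destinations fall into such a range, Python's standard `ipaddress` module can do the arithmetic. This is only a sketch to illustrate the routing decision; the helper name `uses_tunnel` is made up for this example and has nothing to do with WireGuard's own tooling.

```python
import ipaddress

# The range from the client's AllowedIPs setting
allowed_ips = ipaddress.ip_network("10.10.0.0/24")


def uses_tunnel(destination):
    """Return True if traffic to `destination` would take the VPN tunnel."""
    return ipaddress.ip_address(destination) in allowed_ips


hosts = list(allowed_ips.hosts())
print(hosts[0], hosts[-1])       # → 10.10.0.1 10.10.0.254
print(uses_tunnel("10.10.0.1"))  # the VPN server → True
print(uses_tunnel("8.8.8.8"))    # public internet → False
```

Everything outside the range keeps using your normal internet connection, which is why this setup is sometimes called a split tunnel.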

This can all be done automatically in an Ansible script of course. It is a bit complicated because it first installs everything on the wg_server host, afterwards on the wg_client host but then does additional settings on the wg_server host again. The reason is that the client_public_key can only be added to the server config after it has been created, of course.

    ---
    - name: Setup WireGuard Server
      hosts: wg_server
      become: yes
      tasks:
        - name: Install WireGuard on Ubuntu server
          apt:
            name:
              - wireguard
              - wireguard-tools
              - resolvconf
            state: present
            update_cache: yes

        - name: Enable IP forwarding on the server
          sysctl:
            name: net.ipv4.ip_forward
            value: '1'
            sysctl_set: yes
            state: present
            reload: yes

        # Note: this creates a fresh key pair on every playbook run
        - name: Generate private key for WireGuard server
          command: wg genkey
          register: server_private_key

        - name: Save the private key to a file
          copy:
            content: "{{ server_private_key.stdout }}"
            dest: /etc/wireguard/server_private_key
            mode: '0600'

        - name: Generate public key for WireGuard server
          ansible.builtin.shell: "cat /etc/wireguard/server_private_key | wg pubkey"
          register: server_public_key

        - name: Save the public key to a file
          copy:
            content: "{{ server_public_key.stdout }}"
            dest: /etc/wireguard/server_public_key
            mode: '0600'

        # The [Peer] section is appended in the third play
        - name: Create WireGuard configuration file on the server
          copy:
            content: |
              [Interface]
              PrivateKey = {{ server_private_key.stdout }}
              Address = 10.10.0.1/24
              ListenPort = 51820
            dest: /etc/wireguard/wg0.conf
            mode: '0600'

        - name: Install nftables
          apt:
            name: nftables
            state: present

        - name: Create nftables rules file
          copy:
            content: |
              #!/usr/sbin/nft -f
              table ip filter {
                chain INPUT {
                  type filter hook input priority 0; policy accept;
                  udp dport 51820 accept # Allow UDP traffic on port 51820 for WireGuard
                }
              }
            dest: /etc/nftables.rules
            owner: root
            group: root
            mode: '0644'

        - name: Apply nftables rules
          command: nft -f /etc/nftables.rules

        - name: Ensure nftables rules are applied on reboot
          lineinfile:
            path: /etc/rc.local
            state: present
            create: yes
            line: 'nft -f /etc/nftables.rules'

        - name: Ensure rc.local is executable (for Debian/Ubuntu)
          file:
            path: /etc/rc.local
            mode: '0755'
            state: file
          when: ansible_distribution in ['Debian', 'Ubuntu']

        # Facts are read by the client play via hostvars
        - name: Set server information as facts
          set_fact:
            wg_server_public_key: "{{ server_public_key.stdout }}"
            wg_server_ip: "{{ ansible_host }}"

    - name: Setup WireGuard Client
      hosts: wg_client
      connection: local
      become: yes
      vars:
        server_public_key: "{{ hostvars[groups['wg_server'][0]]['wg_server_public_key'] }}"
        server_ip: "{{ hostvars[groups['wg_server'][0]]['wg_server_ip'] }}"
      tasks:
        - name: Check if WireGuard is installed on macOS
          shell: wireguard-go --version
          ignore_errors: yes
          register: wg_installed

        - name: Install WireGuard on macOS via Homebrew (if not installed)
          shell: brew install wireguard-tools
          become: no # Homebrew refuses to run as root
          when: wg_installed.rc != 0 and ansible_facts['os_family'] == 'Darwin'

        # /etc/wireguard does not exist on macOS by default
        - name: Ensure the WireGuard config directory exists
          file:
            path: /etc/wireguard
            state: directory
            mode: '0700'

        - name: Generate private key for WireGuard client
          command: wg genkey
          register: client_private_key

        - name: Save the private key to a file
          copy:
            content: "{{ client_private_key.stdout }}"
            dest: /etc/wireguard/client_private_key
            mode: '0600'

        - name: Generate public key for WireGuard client
          ansible.builtin.shell: "cat /etc/wireguard/client_private_key | wg pubkey"
          register: client_public_key

        - name: Save the public key to a file
          copy:
            content: "{{ client_public_key.stdout }}"
            dest: /etc/wireguard/client_public_key
            mode: '0600'

        - name: Create WireGuard configuration file on the client
          copy:
            content: |
              [Interface]
              PrivateKey = {{ client_private_key.stdout }}
              Address = 10.10.0.4/32

              [Peer]
              PublicKey = {{ server_public_key }}
              Endpoint = {{ server_ip }}:51820
              AllowedIPs = 10.10.0.0/24
            dest: /etc/wireguard/wg0.conf
            mode: '0600'

        - name: Check if WireGuard is running
          command: wg show
          register: wg_running
          failed_when: false
          changed_when: false

        - name: Bring up WireGuard interface if not running
          command: wg-quick up /etc/wireguard/wg0.conf
          when: wg_running.stdout == ""

        - name: Restart WireGuard to apply latest configuration if running
          shell: wg-quick down /etc/wireguard/wg0.conf && wg-quick up /etc/wireguard/wg0.conf
          when: wg_running.stdout != ""

        - name: Set client's public key as a fact
          set_fact:
            wg_client_public_key: "{{ client_public_key.stdout }}"

    - name: Adapt WireGuard server config file
      hosts: wg_server
      become: yes
      vars:
        client_public_key: "{{ hostvars[groups['wg_client'][0]]['wg_client_public_key'] }}"
      tasks:
        # blockinfile instead of lineinfile, because we insert several lines at once
        - name: Add client's public key to server configuration
          blockinfile:
            path: /etc/wireguard/wg0.conf
            insertafter: EOF
            block: |
              [Peer]
              PublicKey = {{ client_public_key }}
              AllowedIPs = 10.10.0.4/32

        - name: Start and enable WireGuard on the server
          systemd:
            name: wg-quick@wg0
            enabled: yes
            state: restarted # ensure that the service is restarted

2. Troubleshooting: OCI Security Lists and nftables rules

OCI lets you create a virtual cloud network (VCN) that can contain security lists. In those one can add ingress and egress rules for traffic to and from the VM. This works as a firewall that allows and disallows connection on certain ports, protocols or ranges of IP addresses. The Ubuntu images are using iptables as their firewall. Since iptables is a bit outdated, I chose to switch to the newer nftables.

Even with appropriate VCN firewall settings I was having difficulties connecting the VPN client to the server on udp/51820. After a couple of fruitless bugfixing attempts, this Reddit article finally pointed me in the right direction. Nftables rules are evaluated from top to bottom, meaning that the first matching rule wins and overrules the rules that follow. However, OCI implements a REJECT all rule at the end of the nftables ruleset. This means that all traffic that has not been allowed by a previous rule will be rejected by default as a means to enhance the VM's security. A problem arises if user-specific rules added to the security lists of the VCN get appended to the nftables rules below the REJECT all rule: they never have any effect on the firewall, and in this case traffic on udp/51820 will be rejected.
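The effect of the rule order is easy to reproduce in a few lines of Python. This is a deliberately simplified model of first-match evaluation, not how nftables is implemented; the function `evaluate` and the dictionary-based rule format are invented for this sketch.

```python
def evaluate(rules, packet):
    """Return the verdict of the first rule whose match fields all equal the packet's."""
    for match, verdict in rules:
        if all(packet.get(key) == value for key, value in match.items()):
            return verdict
    return "accept"  # chain policy if no rule matched


reject_all = ({}, "reject")  # empty match: applies to every packet
allow_wireguard = ({"proto": "udp", "dport": 51820}, "accept")
packet = {"proto": "udp", "dport": 51820}

# Rule appended below the REJECT all rule: it is never reached
print(evaluate([reject_all, allow_wireguard], packet))  # → reject
# Rule placed above the REJECT all rule: it takes effect
print(evaluate([allow_wireguard, reject_all], packet))  # → accept
```

The takeaway is that an allow rule only helps if it sits above the catch-all reject.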

3. Applying firewall rules

To secure the VM from unwanted access, there are a couple of settings to be made:

- SSH connection must only be established via the WireGuard interface while connected to the VPN server.

- HTTP/S connections must also only be allowed via WireGuard interface.

- VPN access is possible for all IP addresses but only on port 51820 (the clients' public keys have to be added to the WireGuard server configuration of course)

- All ports other than 51820 need to be closed, also port 22, which means you can only SSH onto the machine while connected to the VPN.

Those rules could be applied manually via the CLI but again, it makes sense to collect them in a file rules.nft and apply it with the help of an Ansible script.

The rules.nft for the requirements listed above can look like this:

    #!/usr/sbin/nft -f

    # Flush the existing ruleset
    flush ruleset

    table ip filter {
        chain INPUT {
            type filter hook input priority 0;
            policy accept;
            udp dport 51820 counter accept
            tcp dport 22 counter accept
            iifname "wg0" counter accept
            ct state related,established counter accept
            counter reject
        }

        chain FORWARD {
            type filter hook forward priority 0;
            policy accept;
            iifname "wg0" counter accept
            ct state related,established counter accept
        }

        chain OUTPUT {
            type filter hook output priority 0;
            policy accept;
            udp dport 53 counter accept
        }
    }

    table ip nat {
        chain PREROUTING {
            type nat hook prerouting priority -100;
            policy accept;
        }

        chain INPUT {
            type nat hook input priority 100;
            policy accept;
        }

        chain OUTPUT {
            type nat hook output priority -100;
            policy accept;
        }

        chain POSTROUTING {
            type nat hook postrouting priority 100;
            policy accept;
            oifname "ens3" ip saddr 10.10.0.0/24 counter masquerade
        }
    }

Input from the public internet is only accepted on udp/51820 (and, as long as Ansible still needs it, on tcp/22), regardless of the source IP address. When connected via the wg0 interface, there are no traffic restrictions. For future steps it is also important to allow FORWARDing traffic from my laptop via the jumphost to the target GCP VM.

All other incoming traffic will be REJECTed. Why not DROP the traffic? Peter Benie discusses this in more depth in his blog article. But to summarize it: REJECT is a graceful exit in which a user is informed that a port is unreachable, while DROP lets a user run into a black hole where the connection simply times out after a given time. However, dropping unwanted traffic provides no additional security against sophisticated port scanning tools like nmap, while it makes it harder for legitimate applications to debug errors.
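From the client's point of view, the difference shows up as an immediate error versus a long wait. The following Python sketch classifies a TCP port accordingly. It is only an illustration under the assumption that a rejected connection surfaces as "connection refused" on your system; the function name `probe` and the returned labels are made up for this example.

```python
import socket


def probe(host, port, timeout=2.0):
    """Classify a TCP port as 'open', 'rejected', 'no answer' or 'unreachable'."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return "open"
    except ConnectionRefusedError:
        return "rejected"      # the other side answered with an explicit refusal
    except socket.timeout:
        return "no answer"     # nothing came back in time, as with DROP
    except OSError:
        return "unreachable"   # e.g. no route to the host


# Demo on the loopback interface: probe a listening socket,
# then probe the same port again after the listener is closed.
listener = socket.socket()
listener.bind(("127.0.0.1", 0))
listener.listen()
demo_port = listener.getsockname()[1]
print(probe("127.0.0.1", demo_port))
listener.close()
print(probe("127.0.0.1", demo_port))
```

Pointed at a firewalled port, a "rejected" result comes back right away, while "no answer" only appears after the full timeout has passed. That waiting time is exactly what makes DROP annoying to debug.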

Also: Don't forget to persistently save your settings by copying your rules to /etc/nftables.rules and making sure that nftables starts at every reboot.

The playbook for applying those rules could look like this:

    ---
    - name: Deploy and apply nftables rules on Oracle OCI VM
      hosts: wg_server
      become: yes
      tasks:
        - name: Install nftables
          apt:
            name: nftables
            state: present
            update_cache: yes

        - name: Start and enable nftables service
          systemd:
            name: nftables
            state: started
            enabled: yes

        - name: Copy nftables rules file to VM
          copy:
            src: rules.nft
            dest: /etc/nftables.conf
            owner: root
            group: root
            mode: '0644'

        - name: Apply nftables rules from file
          ansible.builtin.shell: "nft -f /etc/nftables.conf"

        # ansible_facts.packages is only populated after package_facts has run
        - name: Gather installed packages
          ansible.builtin.package_facts:
            manager: auto

        - name: Stop and disable iptables if installed
          systemd:
            name: iptables
            state: stopped
            enabled: no
          ignore_errors: yes
          when: ansible_facts.packages['iptables'] is defined

        - name: Ensure nftables is enabled and running
          systemd:
            name: nftables
            state: started
            enabled: yes

        - name: Enable nftables rules to apply on reboot
          copy:
            src: /etc/nftables.conf
            dest: /etc/nftables.rules
            remote_src: yes

        - name: Ensure nftables is persistent across reboots
          systemd:
            name: nftables
            state: reloaded

Watch out: It is better to remove the SSH access on tcp/22 after running the Ansible scripts to reduce the attack surface. However, this also means that you have to adapt your Ansible inventory file to use the IP address given in the VPN configuration for future connections.

4. Out-of-band connection in case you locked yourself out

A word of caution is necessary here: It is possible to lock yourself out of your VM forever and make it inaccessible by normal means. For example, you persistently apply firewall rules that only allow connections on udp/51820 and restart the VM ... but then you remember that you forgot to auto-start your VPN server and are now unable to connect to the jumphost. This is actually not unusual and might happen several times per day in cloud organizations. Therefore, out-of-band management lets you access your infrastructure on a channel that is separate from the production environment. Usually this is a serial console or a terminal server dedicated specifically to this kind of incident.

Oracle realizes this by creating a console connection or cloud shell connection ... at least they claim that is a viable option. You can only upload a SSH public key, not a private key. They prompt you for using your user credentials for remotely accessing the VM, so you try your username ... and then you remember that you have never set a password for it and that this option is also not viable.

My hint is: Set a user password before applying those strict firewall rules. You want to be able to access the jumphost again later on. It should not be regarded as a disposable VM that you can easily tear down and spin up again every other day. Oracle says that by default there is no password for the users due to security reasons, which might make sense if SSH is used with passwords instead of keys and the VM is not hidden behind a VPN. If any of you have found out how to upload your SSH private key to the out-of-band connection, or any other way to avoid being locked out, please let me know!

5. Configuration of ssh-config, setting DNS entries and forwarding requests

So your firewall is now configured, your VPN is set up and you tested your connection with a command like ssh -i ~/.ssh/ssh-key-oraclevm.key ubuntu@89.168.67.219. Okay, this clearly doesn't work anymore because you now have to be connected to the VPN. So you turn on your WireGuard interface and change the command to ssh -i ~/.ssh/ssh-key-oraclevm.key ubuntu@10.10.0.1. Now this does work but it is still a bit cumbersome, isn't it?

First of all, the command is long and you always need to remember the location of the SSH key and your user name. Second of all, the IP address might be easy to remember if you only have one machine but what if you spin up 10 different servers?

Let's fix the first problem and edit our ssh-config file. This is usually located at ~/.ssh/config.

    Host jumphost
        Hostname 10.10.0.1
        User ubuntu
        Port 22
        IdentityFile ~/.ssh/ssh-key-oraclevm.key

You can create an entry like this that specifies hostname, user and the path of the SSH key and makes it easier for you to SSH onto the machine by typing ssh jumphost. This is neat. However, it still depends on the specific IP address, which might change over time and then needs to be adjusted in many different places.

A better approach would be the following:

    Host jump.paulelser.com
        User ubuntu
        IdentityFile ~/.ssh/ssh-key-oraclevm.key
        ForwardAgent yes
        AddKeysToAgent yes

    Host *.paulelser.com !jump.paulelser.com
        User paul
        ForwardAgent yes
        AddKeysToAgent yes
        ProxyJump jump.paulelser.com

Now an SSH connection is established to the address jump.paulelser.com instead of some IP address. There are also some settings necessary for using the jumphost as an intermediary and connecting to the second VM on the fly. This approach requires you to go to your domain registrar and change settings there. You need to set a DNS A record with the name jump and the IP address of your jumphost, 10.10.0.1 in my case. This will be updated by Google's DNS servers immediately, but it might take some time to refresh the cache on your machine.

Running host -t ns paulelser.com lists all DNS servers used for your address while host jump.paulelser.com ns-cloud-c3.googledomains.com outputs the newly associated IP address for your custom DNS entry.
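Coming back to the second Host block in the SSH config: the negated pattern !jump.paulelser.com matters, because without it the jumphost itself would match *.paulelser.com and would be told to proxy through itself. The following Python sketch mimics the matching rule as I understand it from the ssh_config documentation (a host matches if at least one positive pattern matches and no negated pattern does). It is a simplification and not OpenSSH's actual code; the function name `host_block_applies` and the hostname gcp.paulelser.com are hypothetical.

```python
from fnmatch import fnmatchcase


def host_block_applies(patterns, hostname):
    """Roughly mimic how ssh decides whether a `Host` block applies to a hostname."""
    positive_match = False
    for pattern in patterns.split():
        if pattern.startswith("!"):
            if fnmatchcase(hostname, pattern[1:]):
                return False  # a matching negated pattern always excludes the host
        elif fnmatchcase(hostname, pattern):
            positive_match = True
    return positive_match


proxy_rule = "*.paulelser.com !jump.paulelser.com"
print(host_block_applies(proxy_rule, "jump.paulelser.com"))  # → False
print(host_block_applies(proxy_rule, "gcp.paulelser.com"))   # hypothetical target VM → True
print(host_block_applies(proxy_rule, "example.org"))         # → False
```

So every host under the domain is reached via the jumphost, except the jumphost itself, which is contacted directly.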

6. Connection to the second VM

Now I assume you have followed the next two articles and created the second VM on GCP as well. SSHing onto it is now trivial thanks to the second rule in the SSH config. However, we do need to set a DNS A record for the target VM if we don't want to type in the IP address manually all the time. Remember that the target VM is considered disposable: it can be spun up and destroyed at any time, which changes its IP address and forces you to reconfigure the DNS entry.

Another thing to mention is the firewall configuration on the target VM. Allow SSH access (tcp/22) only from the jumphost's IP address. This way a user has to be connected to the jumphost's VPN in order to access the disposable second target VM, which adds a stronger layer of security for our purposes.